The AI dilemma: friend or foe?

The use of artificial intelligence (AI) is on the rise, but opinions are divided. And when it comes to cyber security, it could hardly be more controversial. The consequences of immature AI systems and of AI used with criminal intent are far-reaching. But can we always tell when we’re being duped?

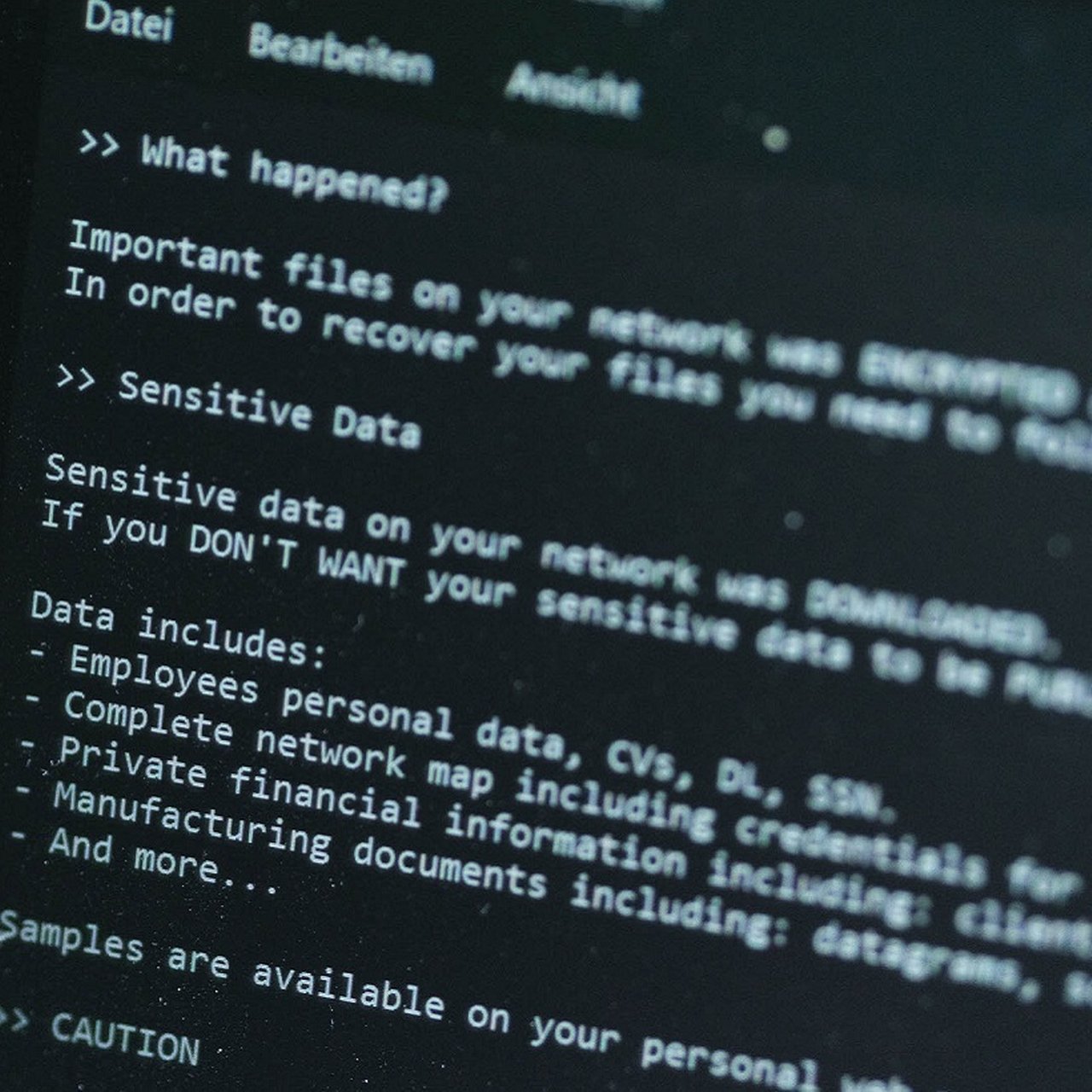

New levels of cyber crime

The rapid development of AI not only promises progress in many areas, but also poses considerable risks, for example to cyber security. Cyber criminals can do a great deal of damage with AI: even inexperienced hackers can use AI tools to carry out sophisticated attacks. These tools can automatically detect and exploit vulnerabilities in systems, and there has been a sharp increase in the number of cyber attacks.

According to Statista, almost 17 million cyber crimes were registered worldwide in 2023. The UK's National Cyber Security Centre expects the volume and impact of cyber attacks to increase over the next two years as a result of AI. The previous peak of more than 19 million attacks in 2021 could be equalled or exceeded.

Wolf in sheep's clothing

The biggest risks in the next two years are misinformation and disinformation, according to the World Economic Forum's "Global Risks Report 2024". These risks are heightened because generative AI is increasingly being used to create "synthetic content", such as voice clones, fake videos (deepfakes) and websites that are almost indistinguishable from real ones. It’s a wolf in sheep’s clothing, a camouflaged danger.

Looking ahead to the next ten years, disinformation and misinformation rank fifth and the impact of AI ranks sixth, right after four risks related to climate change.

Dangerous search engines

Immature AI search systems can spread false information even faster. A good example: Google's AI suggested that a user stick cheese on a pizza – with glue. We can chuckle at such an obvious trick, but the fact that these systems are apparently susceptible to manipulation is a problem. They are often unable to detect and filter out fake content and so circulate false but very convincing information. And this is already having a noticeable impact on our societies: trust is being undermined. One example of this is the use of AI to influence elections with fake news. This is a direct threat to democracy.

Younger people tend to trust AI more, while older people are more sceptical. Regardless of age, it is becoming even more important for us to check information for missing or incorrect sources and for inconsistencies, to question it and to consult several trustworthy sources.

Many ways to deceive

Besides misinformation and disinformation – whether as text, images or video – and immature AI search engines, fraudsters also use AI to dupe and swindle people. And they’re doing it in a whole range of different ways. They use fake images and text to create phishing emails, websites or fraudulent social media profiles, usually with the aim of stealing money, data or both.

Genuine-looking videos and authentic-sounding voice clones make it easier for fraudsters to scam and blackmail people, for example by making their victims believe a family member is in distress, luring them into an emotional trap. Another example is AI-driven chatbots that seem so human that people trust them with their passwords or PINs. Whatever the method, these scams are becoming more and more sophisticated and it’s really difficult to tell the difference between real and fake. Detecting authenticity is becoming an important skill.

Safeguard

AI is not only used to scam people, but also for the opposite: to prevent and defend against fraud. The possible applications here are also diverse. AI systems can detect fraud attempts immediately and automatically prevent damage; they can analyse and process large amounts of data effectively. Without AI, many security strategies are based on static rules. Because AI can continuously learn, it can also deal with new threats more quickly. Smaller companies can also benefit from more security through AI.

AI does not replace humans, and it cannot be used meaningfully without them: it is less prone to errors when it works alongside people. Detecting threats quickly and easily can reduce costs and increase efficiency. According to a report by IBM, companies that use security AI and automation extensively save an average of 2.22 million US dollars compared to those that don't.

This page was published in 10/2024.

Katrin Palm

... is responsible for digital content and campaigning in Deutsche Bank’s Comms & CSR department and is fascinated by the possibilities of AI. She also sees the need, however, to provide more information on how it can be misused – especially when it comes to staying safe online – and to keep up to date on the different ways we can prevent misuse.
